Social media giant Meta removed three foreign influence operations from the Facebook platform during the third quarter of 2023. It designates such operations as coordinated inauthentic behavior (CIB). Two were Chinese in origin and one was Russian, the company says.

In each case, the purpose of the CIB was to influence public opinion by spreading false and/or misleading information. Overall, Russia, Iran, and China are the most prolific sources of foreign influence campaigns.

For one Chinese CIB, Meta removed 13 Facebook accounts and seven Groups. One of these Groups had attracted about 1,400 followers. False personas on both Facebook and X (formerly Twitter) posed as journalists, lawyers, and human rights activists.

Two clusters of fictitious personas targeted Tibet and the Arunachal Pradesh region of India, respectively. The Tibet cluster accused the Dalai Lama and his followers of corruption and pedophilia, while the Arunachal Pradesh cluster accused the Indian government of corruption and of supporting ethnic violence.

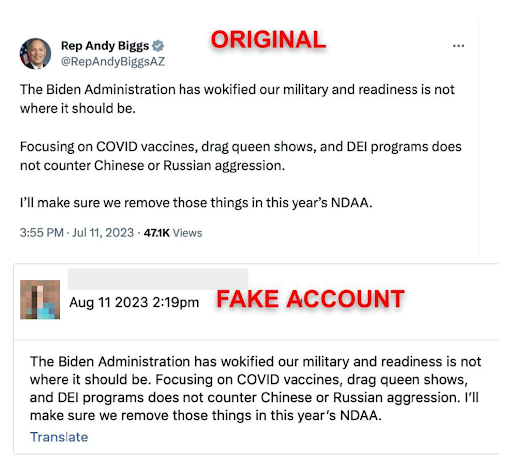

For the second Chinese CIB, Meta removed 4,789 Facebook accounts posing as Americans and attempting to influence US politics and US-China relations. This CIB was removed before it could effectively engage with authentic communities on Meta's platforms.

This CIB criticized both sides of the US political divide. Its methods included copying and pasting legitimate posts from X, resharing posts by Elon Musk, resharing legitimate Facebook posts, and linking to genuine mainstream media articles. The likely purpose was to make the accounts appear more authentic.

For the Russian CIB, Meta removed six Facebook accounts, one Page, and three Instagram accounts. This CIB targeted English-speaking audiences and primarily discussed the war in Ukraine. It was supported by fictitious ‘media’ brands on Telegram, which were in turn promoted by Russian embassies and diplomatic missions on Facebook, X, and YouTube.

The Russian CIB accused Ukraine of war crimes and accused Ukraine’s supporters of ‘Russophobia’. It also posted criticism of transgender rights and human rights, as well as of US President Joe Biden and French President Emmanuel Macron, while praising Russian activity in West Africa and criticizing French activity there.

The network garnered around 1,000 followers on Facebook and around 1,000 on Instagram.

The primary common factor in these operations is that they seek to influence opinion on current geopolitical situations. Meta expects such activity to increase in 2024 ahead of upcoming elections in the US and Europe. Russian and Iranian campaigns have been found in previous US election cycles, although they were not considered sufficient to affect election results. Meta also expects generative AI (gen-AI) to dramatically scale the volume of election-related content, including influence campaigns, in 2024.

Gen-AI is a double-edged sword. While it will increase adversarial activity, it also helps detect such activity. “At this time,” comments Meta, “we have not seen evidence that it will upend our industry’s efforts to counter covert influence operations – and it’s simultaneously helping to detect and stop the spread of potentially harmful content.”

CIB networks attempt to maintain their presence or rebuild it after removal; Meta calls attempts to rebuild recidivist behavior. “Some of these networks may attempt to create new off-platform entities,” Meta notes in its report, “such as websites or social media accounts, as part of their recidivist activity.”

There is also a growing practice of decentralizing online activities to increase resilience to takedowns. Meta cites the Chinese ‘Spamouflage’ operation as an example. “It was seen running on 50+ platforms, and it primarily seeded content on blogging platforms and forums like Medium, Reddit and Quora before sharing links to that content on ours.”

Meta suspects that this decentralization of activity is a response to increasing pressure from the major platforms: long-running misinformation campaigns are becoming harder to maintain and more costly to operate. At the same time, because operations now spread across multiple platforms, Meta suggests that information sharing is increasingly important.

It is critical, says Meta, “to continue threat sharing across our industry and with the public so that all apps – big or small – can benefit from threat research by others in identifying potential adversarial threats.” Information sharing with and from government is also important. In 2020, Meta took down influence operations originating in Russia, Mexico, and Iran following a tip from law enforcement.
