If you believe that the 2020 Presidential election in the United States represented the worst kind of campaign replete with lies, misstated facts and disinformation, I have some news for you. You haven’t seen anything yet.

The rapid evolution of artificial intelligence (AI) and analytics engines will push campaign-year disinformation into hyperspeed: false content will be created faster, spread further and have greater impact. To prepare ourselves as a society to sift through falsehoods, deal with them appropriately and arrive at the truth, we need to understand how disinformation works in the age of AI.

This article describes the four steps of an AI-driven disinformation campaign and how to get ahead of them, so that security teams are better prepared to deal with – and seek the truth behind – the evolving tactics of malicious actors.

The Four Steps of AI-Driven Disinformation Campaigns

Step 1: Reconnaissance

In this initial step, threat actors perform data mining, sentiment analysis and predictive analytics to identify specific points of social leverage. They use these leverage points to create virality, to precisely target specific issues, groups and individuals, and to segment their target audiences so that their messages resonate as strongly as possible. It is akin to a professional marketing organization developing personas for persona-based marketing and sales.

Step 2: Content Creation

AI, particularly generative AI, helps threat actors make more content, faster. It also lets them make content that appears far more realistic. The real power of AI here is that it can quickly produce highly credible content that is multimedia, multilingual, coordinated, frequently refreshed and monitored. Experts across every field are already concerned about such realistic deepfakes. Commenting on a recent study which found that disinformation generated by AI may be more convincing than disinformation written by humans, Giovanni Spitale, who led the study, said: “The fact that AI-generated disinformation is not only cheaper and faster, but also more effective, gives me nightmares.”

AI also strengthens content creation by eliminating content reuse and the other telltale markers of fake content produced by humans. Targeted groups and individuals have traditionally been able to filter out fake content, provided they were on guard for it. With AI behind the scenes, the reuse and markers disappear, and threat actors can more effectively weaponize all of this new, real-looking content.

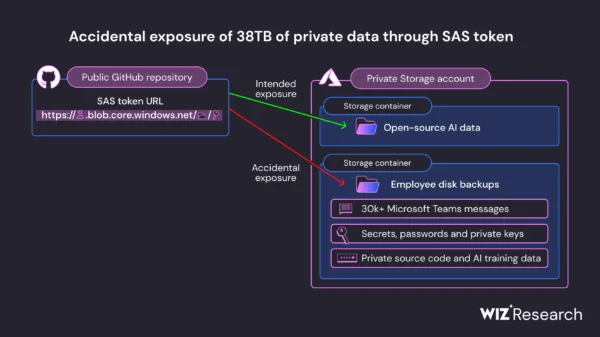

In many cases, threat actors already hold data from successful hacking attacks. Using AI, they can subtly alter that data and the forms in which they present it. AI not only enables them to inject the data into many forms of new content, but also lets them do so at a scale and speed far beyond anything human operators could achieve.

The tie-in to elections? Fake people voicing election opinions. Threat actors can instruct AI to sound like different types of Americans, across every segment of the population. For example, AI can easily create content that reflects the attitudes, opinions and vocabulary of a Midwestern farmer if instructed to do so. It can then use the same data to create equally realistic content in the voice of someone from Texas. AI is that flexible, and it keeps adjusting as it takes in more and more data, much of which people generate themselves on social media.

Step 3: Amplification

Amplification is, essentially, the act of getting as many people as possible to see your content across social media platforms. Leveraging analytics and AI, threat actors now have the infrastructure to create an army of fake personas that look real at the start and become progressively ‘more real’ over time, as AI fills in their profiles with regular, credible posts and non-controversial backstories. As a result, threat actors can orchestrate these personas more effectively, and the personas appear to be genuinely authentic participants in online discussions of election-related topics. Until recently, that was not possible. Threat actors had to rely on images taken from services like ‘thispersondoesnotexist.com’, which are fairly easy to identify. Many even used stock photos, and anyone could simply run a Google image search to discover that the images had no real connection to the persona using them.

With this new army of hybrid real-fake personas in place, threat actors can deploy it automatically and in a coordinated manner across social platforms. By posting, boosting and interacting with the previously generated content, the personas can persuade anyone who takes the bait. This takes social campaigns to an entirely new level.

Step 4: Actualization

Actualization in the context of AI-driven social campaigns involves gathering feedback from prior and ongoing efforts to further optimize content creation and amplification. Again, applying analytics and AI to the data gathered from their efforts, threat actors refine their content to make it more credible and more targeted. The feedback loop can track people’s reactions to shared content to gain higher-precision insights that allow for the following:

- More granular segmentation of target audiences

- Identification of the types of content that have greater impact (by audience type)

- Pinpointing the social platforms that best amplify certain content types

- Ideation for new personas never before considered

Creative operators who become skilled at prompting AI engines with increasingly targeted questions about social campaigns, and about the data those campaigns produce, can devise endless scenarios, and AI can advise them on how best to approach each one strategically and tactically. As a result, each round of actualization becomes more impactful than the last.

What to Do About Our New Reality

In my last two pieces for SecurityWeek, I predicted the mainstreaming of AI-powered disinformation and described how security teams can preemptively address disinformation campaigns, including the importance of “pre-bunking” to strategically and psychologically prepare people for their effects.

My hope is that this mini-series on AI and disinformation will give a complete picture of what security teams across industries need to prepare for, and strategically prioritize, this year as major events such as the 2024 election cycle get into full swing.