With the recent US presidential Executive Order on AI, and the AI Safety Summit being held at Bletchley Park in the UK, developments in artificial intelligence continue to command headlines around the world.

The recent rapid rise of artificial intelligence continues to be a game-changer in many positive ways, even though we are still touching the very fringes of its potential. New and previously unimaginable medical treatments, safer, cleaner and more integrated public transport, more rapid and accurate diagnoses, and environmental breakthroughs are all within the credible promise of AI today. Yet, within this revolution, a shadow looms.

Both China and Russia have made no secret of their desire to “win the AI race,” with current and pledged investments ranging from hundreds of millions to billions of dollars in AI research and development. While companies like OpenAI, IBM and Apple might be top of mind when asked to name the major players in artificial intelligence, we should not forget that for every Amazon there’s an Alibaba, for every Microsoft a Baidu, and for every Google a Yandex. It is inevitable that states, activists, and advanced threat actors will leverage the power of AI to turbocharge disinformation campaigns.

AI’s growth has paved the way for innovative approaches to spreading mis- and disinformation online. From fabricating fake cyberattacks and disrupting incident response plans to manipulating the data lakes used for automation, AI-powered disinformation campaigns can expose weaknesses in, or wreak havoc on, established security systems and processes. Imagine an exponential increase in the volume and quality of fake content; armies of automated, AI-driven digital personae, replete with rich and innocent backstories, created to disseminate and amplify it; and predictive analytics that identify the emotional pressure points most likely to sow confusion and panic.

This trend poses a significant threat to cybersecurity practitioners, challenging security teams to counter emerging techniques that use AI to deceive, manipulate, and create chaos. A post-truth society requires a post-trust approach to truth.

The implications of AI-driven disinformation techniques are manifold and include:

- Undermining Incident Response Plans: By fabricating false external incidents or simulating cyberattacks, threat actors using AI can mislead security teams, leading to misallocated resources and confused response procedures, and exposing or weakening incident mitigation strategies.

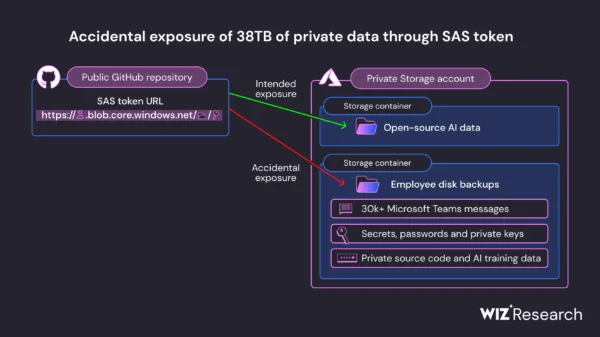

- Manipulating Data for Misinformation: AI can be leveraged to tamper with the data lakes used for automation. By injecting false data, generating large volumes of poisoned data, or manipulating existing information, threat actors can compromise the integrity and reliability of data-driven decision-making, leading to incorrect conclusions and faulty automation, with potentially catastrophic results.

- Erosion of Trust and Confidence: The dissemination of AI-fueled disinformation erodes trust in information systems and diminishes confidence in the accuracy of data and security measures. This can have profound consequences, affecting not just technological systems but also undermining public trust in institutions, companies, and overall cybersecurity infrastructure.

Challenges abound for security teams combating these AI-driven disinformation campaigns, and the sophistication of AI tools is a significant hurdle in itself. Advances in AI enable threat actors to create highly sophisticated and realistic disinformation campaigns, making it difficult for security systems to distinguish genuine information from fabricated information. It’s akin to finding a needle in a haystack, made harder still by how quickly AI techniques evolve. Security teams will need to adapt just as swiftly to this ever-changing landscape, which requires continuous learning, the development of new defense mechanisms, and staying abreast of the latest AI-driven threats.

The current absence of comprehensive regulatory frameworks and standardized practices for AI, with particular reference to cybersecurity, leaves a void, making it difficult to mitigate the misuse of AI in disinformation campaigns.

To counter these threats, security teams must adopt increasingly innovative strategies. AI-powered defense mechanisms, such as machine learning algorithms that can detect and neutralize malicious AI-generated content, are essential. AI tools can ingest and make sense of the huge, disparate volumes of data generated across an organization, establishing a reasonable baseline and alerting on potential manipulation. AI offers perhaps the best opportunity for building effective data integrity models capable of operating at such a scale. Equally, AI could serve as an external sentinel, monitoring for emerging content, activity or sentiment and extrapolating the likely or potential threats to your business.
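To make “establishing a reasonable baseline and alerting on potential manipulation” a little more concrete, here is a deliberately simple sketch. It swaps the machine learning models described above for a plain statistical baseline (a z-score of each new observation against historical values). The function name `flag_anomalies`, the threshold of 3 standard deviations, and the daily record counts are all hypothetical illustrations, not a production detector.

```python
import math
from statistics import mean, stdev


def flag_anomalies(history, new_values, threshold=3.0):
    """Hypothetical helper: flag new observations that deviate sharply from
    a baseline computed over historical observations.

    Returns a list of (index, value, z_score) tuples for each new value whose
    absolute z-score exceeds `threshold`.
    """
    if len(history) < 2:
        raise ValueError("need at least two historical values for a baseline")

    # Baseline: mean and sample standard deviation of past observations.
    baseline_mean = mean(history)
    baseline_std = stdev(history)

    flagged = []
    for i, value in enumerate(new_values):
        if baseline_std == 0:
            # Perfectly flat history: any change at all is treated as anomalous.
            z = 0.0 if value == baseline_mean else math.inf
        else:
            z = (value - baseline_mean) / baseline_std
        if abs(z) > threshold:
            flagged.append((i, value, z))
    return flagged


if __name__ == "__main__":
    # Illustrative daily record counts for one hypothetical data lake feed.
    past_week = [1000, 1020, 980, 1010, 990, 1005, 995]
    today_batches = [1003, 1500, 998]
    for index, value, z in flag_anomalies(past_week, today_batches):
        print(f"batch {index}: {value} records (z = {z:.1f})")
```

Here only the 1,500-record batch is flagged. A real data integrity model would learn baselines across many signals at once, which is where the AI-driven approach described above earns its keep; the sketch only shows the shape of the idea.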

Consider how your defenses can benefit from AI-powered data collection, aggregation, and mining capabilities. Just as a potential attacker starts with reconnaissance, the defender can do the same. Ongoing monitoring of the information space surrounding your organization and industry could serve as a highly effective early warning system.

Education and awareness also play a pivotal role. Continuously training security professionals on the latest AI-driven threats leaves them better equipped to adapt to evolving challenges. Collaboration within the cybersecurity community is crucial here: sharing insights and threat intelligence creates a united front against these ever-adapting adversaries. Cultivating critical thinking skills, in turn, enables security teams to identify and stop disinformation campaigns more effectively.

Maintaining constant vigilance and adaptability is another key to countering these threats. Past incidents, such as the manipulation of public opinion through social media misinformation campaigns, underscore the need for an agile approach that constantly updates protocols to counter emerging threats. Part of the effectiveness of disinformation comes from its “shock factor.” Fake news can seem so serious, and the danger so imminent, that people react in an uncoordinated way unless they have been prepared for the situation in advance. This is where “pre-bunking” the types of disinformation your business is most likely to be hit by can be extremely helpful. It helps employees mentally prepare for certain anomalies and be ready to take the appropriate next steps.

Security leaders should initiate conversations across IT, OT, PR, Marketing, and other internal teams to make sure they know how to collaborate effectively when disinformation is discovered. A simple first step is to incorporate disinformation scenarios into tabletop exercises or periodic team training.

While offering seemingly endless possibilities, AI also exposes us to new vulnerabilities. The rise of AI-powered disinformation presents an immense challenge to society’s ability to discern fact from fiction, and fighting back demands a comprehensive approach. By embracing a strategy that combines technological advancements with critical thinking skills, collaboration, and a culture of continuous learning, organizations can more effectively safeguard against the disruptive effects of AI-driven disinformation.