Use of open source software continues to grow and is now expanding into the burgeoning AI market. Two new products aim to secure the traditional OSS supply chain, and the new AI model software supply chain.

The open source software (OSS) supply chain is an enduring threat showing no sign of imminent solution. Software bills of materials (SBOMs) are one approach, but there are doubts over whether they can solve the unique problems of OSS. This means companies must take proactive steps to secure their own open source software supply chain.

Two new products offer solutions: Venafi’s Stop Unauthorized Code Solution, and Protect AI’s Guardian. Each tackles a specific section of the OSS issue — the former focuses on the traditional OSS market, while the latter tackles the new and growing open source machine learning model market.

Venafi already provides a machine identity solution. This includes CodeSign Protect, which ensures that imported code has not been compromised. CodeSign Protect can be directed against the OSS supply chain, but is only part of a complete solution. While the OSS code’s integrity can be assured and it can be authorized to run, businesses also need to ensure that other, unauthorized code is blocked. Doing so adds the not insignificant ability to block undetected malware as well as unauthorized OSS.

The firm has now combined CodeSign Protect and the CodeGuard Service as a new product under the generic title of the Stop Unauthorized Code Solution. It can be used to allow good code, even from an OSS source, while stopping bad code that may be undetected by intrusion detection systems. “This process is crucial because if intrusion detection systems can’t spot hidden malicious code, your company might be at a higher risk of malware attacks, zero-day exploits and the like,” says Venafi in a blog announcing the new product.

CPO Shivajee Samdarshi adds, “Venafi’s industry-first Stop Unauthorized Code Solution helps security teams tackle this growing challenge by stopping unauthorized code in its tracks – and effectively hardens systems and networks.” The solution confirms the authenticity of authorized OSS software – allowing it to run – and blocks unauthorized OSS and potentially malicious code. It operates across all environments, from cloud native platforms such as Kubernetes to platforms such as Windows, Linux, Apple, and Android.
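The allow-and-block idea can be illustrated with a deliberately simplified sketch. It is not Venafi’s implementation: where the product relies on code signing, this example swaps in a plain allow-list of SHA-256 digests, and the function names `sha256_hex` and `is_authorized` are hypothetical. The point is only the default-deny behavior: code runs if it matches something explicitly approved, and everything else is refused.

```python
import hashlib
import hmac
from typing import Iterable


def sha256_hex(artifact: bytes) -> str:
    """Return the SHA-256 digest of an artifact as a hex string."""
    return hashlib.sha256(artifact).hexdigest()


def is_authorized(artifact: bytes, approved_digests: Iterable[str]) -> bool:
    """Default-deny check: allow only artifacts whose digest was approved.

    Assumption: approved_digests is a hypothetical allow-list maintained by
    the security team. A real deployment would verify a code signature
    rather than compare raw digests.
    """
    digest = sha256_hex(artifact)
    return any(hmac.compare_digest(digest, approved) for approved in approved_digests)


if __name__ == "__main__":
    approved = {sha256_hex(b"trusted build")}
    print(is_authorized(b"trusted build", approved))            # approved artifact
    print(is_authorized(b"trusted build + implant", approved))  # modified artifact
```

Any change to the artifact, however small, produces a different digest, so tampered or unknown code fails the check without the checker needing to recognize it as malware.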

Protect AI’s Guardian product is designed to solve a newer and rapidly expanding open source threat: machine learning models. “The growing democratization of Artificial Intelligence and Machine Learning (AI/ML) is largely driven by the accessibility of open source ‘Foundational Models’ on platforms like Hugging Face,” explains the firm. “These models, downloaded millions of times monthly, are vital for powering a wide range of AI applications. However, this trend also introduces security risks, as the open exchange of files on these repositories can lead to the unintended spread of malicious software among users.”

Hugging Face is not the only source of ML models, but it is the best known. To put this in context, the company’s own figures state: ‘The Hugging Face Hub is a platform with over 120k models, 20k datasets, and 50k demo apps (Spaces), all open source and publicly available, in an online platform where people can easily collaborate and build ML together.’ Protect AI has published a blog analyzing the Hugging Face repository.

The threat from open source ML models parallels the traditional OSS supply chain threat. “There are thousands of models downloaded millions of times from Hugging Face on a monthly basis, and these models can contain dangerous code,” comments Ian Swanson, CEO of Protect AI.

If one of these open source models has been maliciously modified, the downloader is exposed to a potential model serialization attack. “This occurs when malware code is added to the content of a model during serialization (saving) and before distribution – creating a modern version of the Trojan Horse,” explains the firm.
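A harmless sketch shows why serialization is the weak point when the model format is Python’s pickle. An object can define `__reduce__` to tell the loader which callable to invoke when the data is read back. The class name `BenignPayload` is hypothetical, and the payload here only calls `print`; an attacker would substitute something far less friendly.

```python
import contextlib
import io
import pickle


class BenignPayload:
    """Stand-in for a tampered model object (hypothetical name)."""

    def __reduce__(self):
        # Tells the unpickler: "to rebuild me, call print(...)".
        # The callable is chosen by whoever created the file.
        return (print, ("code ran during unpickling",))


def demo():
    """Serialize the payload, then load it while capturing stdout."""
    blob = pickle.dumps(BenignPayload())
    captured = io.StringIO()
    with contextlib.redirect_stdout(captured):
        result = pickle.loads(blob)  # print() is invoked by the loader here
    return result, captured.getvalue()


if __name__ == "__main__":
    result, output = demo()
    print(repr(result))  # whatever the callable returned, not a model
    print(output, end="")
```

Nothing in the loading code asks for `print` to be called. The instruction travels inside the file, which is what makes the Trojan Horse comparison apt.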

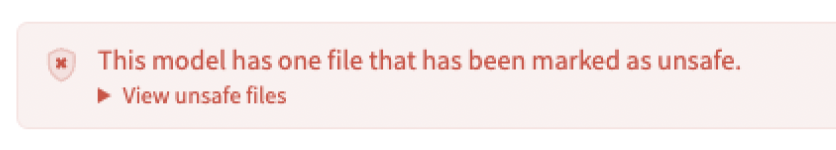

Like all major open source code repositories, Hugging Face attempts to secure the shared code. For example, it uses a version of picklescan to evaluate uploaded pickle files. Python’s pickle format is known to be unsafe, but is widely used because it is a convenient and flexible way to share models. Sometimes, a warning banner is displayed.

But the warning does not prevent a user from pulling and loading a model programmatically. The user doesn’t see the warning, and potentially unsafe code may be downloaded and used.

Like Venafi, Protect AI already has part of its new solution: ModelScan, an open source tool for scanning AI models that the firm launched last year. “To date, over 3,300 models were found to have the ability to execute rogue code,” says the firm. “These models continue to be downloaded and deployed into ML environments, but without the security tools needed to scan models for risks, prior to adoption.” ModelScan alone is better described as an aid than a solution.

Protect AI’s new Guardian solution uses the firm’s scanners to verify the code, but then further acts as a secure gateway. “With advanced access control features and dashboards, Guardian provides security teams control over model entry and comprehensive insights into model origins, creators, and licensing,” claims the firm.

While Venafi has expanded its products to better secure the traditional OSS threat vector, Protect AI has expanded its products to better secure the new and developing open source AI code vector.
