Bringing your own AI to the SOC is an existing, and potentially growing, threat to organizational data.

Two new surveys examine what it takes to make a successful SOC. Both surveys stress the need for automation, but only one examines artificial intelligence (AI) in any depth, and it raises the additional specter of the growing use of bring your own AI (BYO-AI).

The surveys are from Cybereason and Devo. Cybereason, via Censuswide, surveyed 1,203 security analysts at firms with more than 700 employees. Devo used Wakefield Research to query 200 IT security professionals, specifically from larger organizations with more than $500 million in annual revenue.

Cybereason cites ransomware as a key driver of the need for speed within SOCs – a need that can only be satisfied by increased automation. Forty-nine percent of its respondents reported that ransomware is the most common incident type they must deal with daily. (Forty-six percent selected supply chain attacks.)

The problem, however, is not so much the type of incident that needs to be resolved as the volume of alerts received and the time to resolution. More than one-third of the respondents reported receiving between 10,000 and 15,000 alerts every day. Fifty-nine percent of respondents said it takes between two hours and one day to resolve a ransomware incident, while 19% said it takes three to seven days.

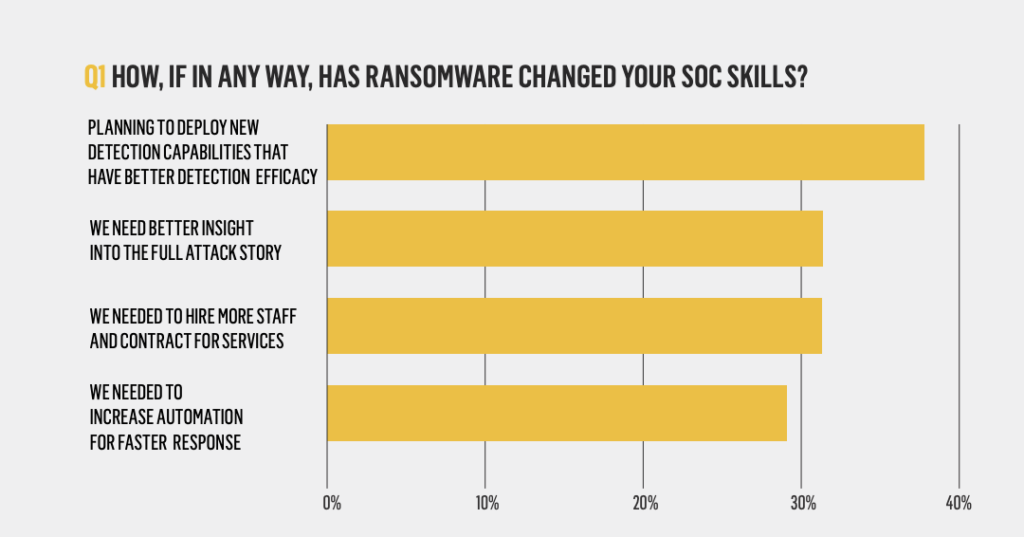

Clearly, these figures don’t reconcile: with thousands of alerts arriving every day and a single ransomware incident taking hours or days to resolve, finding the genuine attack in the haystack is a serious problem for SOC analysts. In the Cybereason survey, ransomware has prompted 38% of respondents to look for better and more accurate detection, while 29% say it has increased their need for automation and faster response times.

Interestingly, ‘artificial intelligence’ or AI is not mentioned in this survey beyond Cybereason’s self-advertisement: “Only the AI-driven Cybereason Defense Platform provides predictive prevention, detection and response that is undefeated against modern ransomware and advanced attack techniques.”

The Devo survey also highlights analysts’ recognition of the need for automation, although no specific threat types are mentioned. Forty-one percent of respondents expect investment in automation to increase by 6% to 10% this year, while 14% expect the increase to be between 11% and 20%. Fifty-four percent expect the increased investment to help with incident analysis, while 53% expect an improvement in threat detection and response.

However, it is equally clear that analysts are not satisfied with current automation solutions. Forty-two percent cite limited scalability and flexibility, while more than one-third are concerned about the high cost of implementation and maintenance, the difficulty of integration with existing systems, and the lack of internal expertise and resources to manage the automation.

The Devo survey also examines the use of AI. The area where AI usage is expected to grow most is incident response (from 28% to 37%), although this remains well below AI’s use for IT asset inventory management (down from 79% to 58%). Worryingly, 100% of the respondents believe that malicious actors are better at using AI than their own organizations are.

Equally worryingly, 100% of the respondents admit they could be motivated to use AI tools not provided by their company. Reasons include ‘easier with a better interface’, ‘more advanced capabilities’, and that such tools ‘allow me to do my work more efficiently’. In fact, 80% of the respondents admit to having already used an AI tool not supplied by the company (increasing to 96% when ‘not me, but someone I know’ is included). This is despite 78% believing that the company would prevent the activity if it knew about it.

The current situation is analogous to the bring your own device (BYOD) issue that exploded as a concern a decade ago but has since been slowly absorbed, accepted, and contained by CISOs. Whether the same process will apply to BYO-AI remains to be seen – but just as the early days of BYOD brought new dangers, so do these early days of BYO-AI.

The survey doesn’t indicate which AI tools are being used privately, but generative pre-trained transformers (as in ChatGPT) or large language models (LLMs) are the most obvious candidates. Devo told SecurityWeek that other tools could include Beautiful.ai, Lumen5, Brandmark.io, and Copy.ai for communication and marketing needs.

“This doesn’t necessarily mean CISOs need to implement total bans [on BYO-AI],” said Devo. “Executive teams must be made aware of the use cases, and the associated risks with those use cases, and then make an informed decision as to whether to accept the risk of utilizing these tools in their organizations.”

For now, however, in these early days of BYO-AI, there is risk. “By ‘rogue AI’ we are talking about AI being used without approval or awareness of the internal security, legal, and procurement teams – those responsible for due diligence. Using any system or application without having the proper reviews in place puts a company at risk,” said Devo CISO and ICIT fellow Kayla Williams.

She likens the situation to visiting a restaurant where you see a clean, white tablecloth and an immaculate front of house/dining room. What you don’t see is the kitchen, which may be full of rodents and cockroaches and mishandling of food. “Without proper due diligence being conducted by the systems and apps and the companies that make them, you cannot be sure of what you are getting,” she said.

“Companies produce some slick technologies, but then utilize the data you entrust them with in nefarious ways, selling it for profit or processing it in ways incompatible with what you have agreed to,” she added. “This adds not only a threat to the user who has provided their data, but also to the company’s data that is being uploaded, potentially impacting their compliance posture, both with customers and with regulators.”

The Devo survey shows that BYO-AI already exists as a potential problem. With the growth in hybrid and remote working, including among SOC analysts, BYO-AI is likely to increase. Notably, the UK’s National Cyber Security Centre (NCSC, part of GCHQ) warned in a blog post published March 14, 2023: “There are undoubtedly risks involved in the unfettered use of public LLMs… Individuals and organizations should take great care with the data they choose to submit in prompts. You should ensure that those who want to experiment with LLMs are able to, but in a way that doesn’t place organizational data at risk.”
