Careful Design of Network and System Security Architecture Can Substantially Enhance Security

Detection & Response are often positioned as competing with Isolation & Prevention. While these classes of security solutions often approach the problem in radically different ways, there can be synergies that allow them to significantly reinforce each other. Both are needed because there will always be some path for an attacker to penetrate any organization. As we saw with Stuxnet, even multiple layers of air gaps are no assurance of safety. Once an organization has been penetrated, it is critical to be able to discover the intruders, expel them, and start cleanup.

However, in most cases the victim organization is not the only target the hackers might have attacked. Given a set of potential targets, attackers, as rational actors, will generally go after the easiest and least defended. Here Isolation and Prevention really shine by forcing hackers onto a much more difficult path of attack. An organization can avoid most attacks simply by being a significantly harder target than similar potential targets. It is the classic case of not having to outrun the bear, only the other hikers. A sound security strategy needs to keep both approaches in mind within a unified security architecture.

Incompleteness is the biggest problem for Detection-based security solutions. Recent studies show that the vast majority of malware is unique to the endpoint where it was found: the code is transformed to evade detection every time it is delivered to a new host. Additionally, high-value targets are attacked with zero-day exploits that detection tools have never seen before. Detection also has a hard time with “low and slow” attacks, which evade many anomaly-based and network-based detection systems by blending into existing patterns of activity and avoiding any spikes or bursts that could attract attention.
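To make the low-and-slow problem concrete, here is a minimal sketch of a naive spike detector. The threshold, the traffic figures, and the function name `spike_alerts` are hypothetical; real network detection systems are far more sophisticated, but the sketch shows how activity that never rises above normal levels can slip past a rule tuned for bursts.

```python
# Hypothetical numbers for illustration only.
SPIKE_THRESHOLD_MB = 100  # alert if any single hour exceeds this volume


def spike_alerts(hourly_mb: list[float], threshold: float = SPIKE_THRESHOLD_MB) -> list[int]:
    """Return the indices of hours whose outbound volume exceeds the threshold."""
    return [hour for hour, mb in enumerate(hourly_mb) if mb > threshold]


baseline = [20.0] * 100  # normal traffic: 20 MB per hour for 100 hours

# Burst exfiltration: 500 MB stolen in a single hour.
burst = baseline.copy()
burst[50] += 500.0

# Low-and-slow exfiltration: the same 500 MB spread evenly over 100 hours.
low_and_slow = [mb + 5.0 for mb in baseline]

print(spike_alerts(burst))
print(spike_alerts(low_and_slow))
print(sum(low_and_slow) - sum(baseline))
```

The same 500 MB leaves the network in both cases; only the burst trips the alarm.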

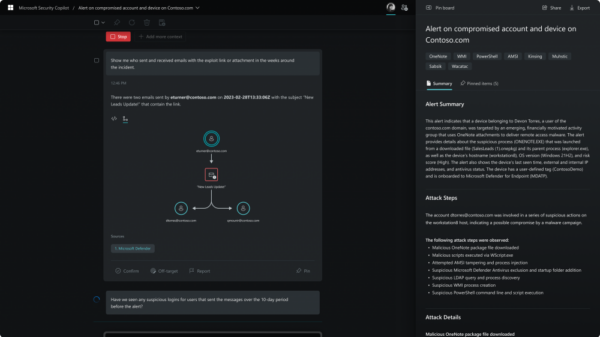

It is critical for Detection to happen quickly. In major breaches, attackers have typically been inside the victim network for months by the time they are detected. Catching an attack within seconds allows quick and relatively painless remediation compared to the massive effort required after months of undetected presence.

A growing area for Detection is watching the attacker’s end objective. Often the purpose of an attack is to steal a trove of documents or a database of financial records. Unusual patterns of activity on those systems should set off alarms, even if the access is fully authorized and comes from apparently clean systems. The failure to detect the massive file dumps by Snowden and Manning shows the importance of this kind of detection.
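One simple way to watch for unusual activity around a data store is to compare each account against its own history rather than against a global rule. The sketch below assumes per-account daily counts of document reads are already being collected; the z-score test, the threshold of 3.0, the function name `is_unusual`, and the sample counts are illustrative assumptions, not a recommended configuration.

```python
import statistics


def is_unusual(history: list[int], today: int, z_threshold: float = 3.0) -> bool:
    """Flag today's count if it sits more than z_threshold standard deviations
    above this account's own historical mean."""
    mean = statistics.mean(history)
    stdev = statistics.pstdev(history)
    if stdev == 0:
        # Perfectly flat history: any increase at all is unusual.
        return today > mean
    return (today - mean) / stdev > z_threshold


# Hypothetical daily document-read counts for one fully authorized account.
history = [40, 55, 38, 47, 52, 44, 50]

print(is_unusual(history, 58))    # within normal variation
print(is_unusual(history, 5000))  # a bulk dump, even with valid credentials
```

Because the baseline is per account, valid credentials do not hide a bulk dump. A patient low-and-slow attacker could still try to stay within normal variation, which is one reason detection alone is not enough.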

Even if detection of the attacker is successful, it can be very difficult to respond effectively.

Particularly after an extended presence in an organization, it may be almost impossible to know which systems the hackers have touched and whether they have actually been expelled from the network. Businesses often resort to a scorched-earth policy in which all systems are assumed to be compromised and must be completely wiped and rebuilt on a new network. The other option is to play whack-a-mole with the attackers, hoping to eliminate all of their footholds before they can move on to new systems. With this approach the defenders can only hope that they know how to identify every trace of the attacker and clean up every system, and that is always in doubt. Either way, recovery from a significant intrusion is hugely resource intensive and requires highly skilled and experienced teams. Even quickly re-imaging compromised desktop computers can consume an inordinate amount of IT resources and cause significant work disruption for the affected employees.

Ideally, one would prevent hacker intrusions in the first place. While it is impossible to block off every possible path into an organization’s networks, it can be done for the most vulnerable and commonly exploited routes. Historically we have tried to accomplish this with real-time detection of attacks, scanning everything coming in to block hostile content before it can touch any servers or endpoints. Unfortunately, this has turned out to be both difficult and generally ineffective. A more promising approach is to disarm incoming files before allowing them through to the endpoint. Many vendors now provide tools that either translate incoming data files or rebuild them in a way that is almost certain to remove any malicious content. There are two problems with these systems, however. First, they often remove important active content from files, such as macros in spreadsheets, or break particularly complex documents so they don’t render properly. Second, they can’t disarm a file in the milliseconds required to protect web connections.
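The rebuild approach can be sketched at the container level for ZIP-based formats such as Office Open XML, where VBA macros in macro-enabled files are stored in a part named `vbaProject.bin`. This is a minimal illustration under stated assumptions: the blocklist and the function name `rebuild_zip` are hypothetical, and a production disarm tool would also parse and regenerate each part's contents and fix up package metadata such as `[Content_Types].xml`, which this sketch does not do. It also shows the first drawback described above: the macros are simply gone.

```python
import io
import zipfile

# Hypothetical policy: entries whose names end with these suffixes are dropped.
# In macro-enabled Office Open XML files, VBA code lives in a vbaProject.bin part.
BLOCKED_SUFFIXES = ("vbaProject.bin",)


def rebuild_zip(data: bytes) -> tuple[bytes, list[str]]:
    """Rebuild a ZIP-based document into a brand-new archive, copying only the
    entries allowed by policy. Returns the new bytes and the dropped names."""
    dropped: list[str] = []
    out = io.BytesIO()
    with zipfile.ZipFile(io.BytesIO(data)) as src:
        with zipfile.ZipFile(out, "w", compression=zipfile.ZIP_DEFLATED) as dst:
            for info in src.infolist():
                if info.filename.endswith(BLOCKED_SUFFIXES):
                    dropped.append(info.filename)
                    continue
                # Writing fresh entries (rather than copying the original archive)
                # means the archive comment, per-entry extra fields, and any bytes
                # appended outside the entries are not carried over.
                dst.writestr(info.filename, src.read(info.filename))
    return out.getvalue(), dropped


# Usage: a toy package with one content part and one macro part.
sample = io.BytesIO()
with zipfile.ZipFile(sample, "w") as z:
    z.writestr("word/document.xml", "<w:document/>")
    z.writestr("word/vbaProject.bin", b"\x00macro")

clean, removed = rebuild_zip(sample.getvalue())
print(removed)
print(zipfile.ZipFile(io.BytesIO(clean)).namelist())
```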

The most promising solution right now seems to be application isolation. It can provide effective protection for inherently vulnerable applications, like web browsers, which contain innumerable potential vulnerabilities and are exposed to direct attack.

Isolation can prevent damage from attacks in two different ways. First, because only the isolated application runs inside the isolating container, attackers cannot reach the other computer and network resources they would need to actually penetrate and impact their targets. There is very little damage that can be done from within these containers. Second, the box is typically almost empty, which prevents the attacker from capturing files, data, and other sensitive information. Only the content currently being processed by the isolated application needs to live in that box, limiting the attacker to capturing that one item. The combination of a small and empty box creates a powerful preventative security control.

Isolation can also make detection tools more effective. The small, empty box does this by creating a very simple and well-defined environment. General-purpose computers have countless applications and files and could be engaged in almost any activity, so it is difficult to know which behavior should be allowed. The isolated environment contains only a single, highly constrained application. Any unexpected process, action, or file is a clear sign of malicious activity. The simplicity of the environment makes detecting an attack much easier and more effective.
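Detection inside an isolated container can be close to a default-deny rule: describe the tiny set of expected behavior and alert on everything else. The sketch below assumes the container's process launches and file writes are already being captured as events; the process names, the writable path, the `Event` type, and the helper names are hypothetical.

```python
import posixpath
from dataclasses import dataclass

# Hypothetical baseline for an isolated browser container. The names and the
# path are illustrative assumptions, not taken from any particular product.
EXPECTED_PROCESSES = {"browser", "browser-renderer", "browser-gpu"}
WRITABLE_PREFIX = "/session/downloads/"


@dataclass(frozen=True)
class Event:
    kind: str    # "process" or "file_write" in this sketch
    detail: str  # process name or file path


def is_expected(event: Event) -> bool:
    """Default deny: only behavior on the short allowlist counts as normal."""
    if event.kind == "process":
        return event.detail in EXPECTED_PROCESSES
    if event.kind == "file_write":
        # Normalize first so "/session/downloads/../../etc/x" is not accepted.
        return posixpath.normpath(event.detail).startswith(WRITABLE_PREFIX)
    return False  # any other kind of event is outside the baseline and flagged


def suspicious(events: list[Event]) -> list[Event]:
    return [event for event in events if not is_expected(event)]


observed = [
    Event("process", "browser"),
    Event("file_write", "/session/downloads/report.pdf"),
    Event("process", "sh"),                      # a shell has no business here
    Event("file_write", "/etc/cron.d/persist"),  # nor does a persistence hook
]

for event in suspicious(observed):
    print(event)
```

On a general-purpose desktop an allowlist this strict would be impractical; inside the isolated box it is meant to describe everything that should ever happen.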

Isolation can also relieve detection of the need to work in real time. Consider isolated browsers. Files still need to flow to the application in real time, but their movement from the isolated environment to the desktop does not need to be instantaneous. There is time to perform detailed inspection and detonation of files before allowing them to move outside the secure isolated box, which makes the detection process much more effective. There is also time to disarm files, providing an additional level of prevention.
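The release step for an isolated browser can be sketched as a gate that runs after the user has already seen the content. The `scan` and `disarm` callables stand in for whatever scanning, detonation, and rebuild tools an organization actually uses; the three-level `Verdict`, the function name `release_from_isolation`, and the policy mapping each verdict to an action are assumptions made for illustration.

```python
from enum import Enum
from typing import Callable, Optional


class Verdict(Enum):
    CLEAN = "clean"
    SUSPICIOUS = "suspicious"
    MALICIOUS = "malicious"


def release_from_isolation(
    data: bytes,
    scan: Callable[[bytes], Verdict],
    disarm: Callable[[bytes], bytes],
) -> Optional[bytes]:
    """Decide what, if anything, leaves the isolated box for the desktop.

    The isolated browser has already rendered the file for the user, so this
    gate is off the real-time path: `scan` may take seconds or minutes
    (for example, a sandbox detonation) without slowing down browsing."""
    verdict = scan(data)
    if verdict is Verdict.CLEAN:
        return data          # release unchanged
    if verdict is Verdict.SUSPICIOUS:
        return disarm(data)  # release only a rebuilt copy
    return None              # malicious: never reaches the endpoint


def toy_scan(data: bytes) -> Verdict:
    # Stand-in for a real scanner or sandbox detonation.
    if b"EVIL" in data:
        return Verdict.MALICIOUS
    if b"macro" in data:
        return Verdict.SUSPICIOUS
    return Verdict.CLEAN


def toy_disarm(data: bytes) -> bytes:
    # Stand-in for a real rebuild: strips the suspicious marker.
    return data.replace(b"macro", b"")


print(release_from_isolation(b"quarterly report", toy_scan, toy_disarm))
print(release_from_isolation(b"report with macro", toy_scan, toy_disarm))
print(release_from_isolation(b"EVIL payload", toy_scan, toy_disarm))
```

Releasing clean files unchanged preserves fidelity; a stricter policy could disarm every file, at the cost of losing active content such as macros.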

Careful design of network and system security architecture can substantially enhance security. As Bruce Schneier observed, sensitive data needs to be treated as a toxic asset: the best protection is to not keep it at all, because what does not exist cannot be stolen. Realistically, most businesses do need to keep a great deal of valuable data that needs to be protected. Completely isolating that data from network-connected systems would be the next best choice. Where that is not possible, it can be kept isolated from web servers and other exposed and vulnerable attack surfaces. Without effective isolation, a breach of the servers can lead directly to complete compromise of the data.
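Whether data really is isolated from exposed systems can be checked mechanically against the network's allowed flows. The sketch below assumes those flows have been exported into a simple adjacency map; the host names, the function name `reachable`, and the choice of `app-api` as the only permitted intermediary are hypothetical. It asks whether the web tier can reach the database by any route that bypasses the intermediary.

```python
from collections import deque

# Hypothetical allowed network flows (source -> destinations), e.g. derived
# from firewall rules. Host names are illustrative only.
FLOWS = {
    "internet": {"web"},
    "web": {"app-api"},
    "app-api": {"customer-db"},
    "admin-vpn": {"app-api"},
}


def reachable(flows: dict[str, set[str]], start: str, target: str, avoid: set[str]) -> bool:
    """Breadth-first search for a path from start to target that never passes
    through any host in `avoid`."""
    seen = {start}
    queue = deque([start])
    while queue:
        node = queue.popleft()
        if node == target:
            return True
        for nxt in flows.get(node, set()):
            if nxt not in seen and nxt not in avoid:
                seen.add(nxt)
                queue.append(nxt)
    return False


# Isolation holds if the web tier cannot reach the database around app-api.
print(reachable(FLOWS, "web", "customer-db", avoid={"app-api"}))

# A single misconfigured rule opens a direct path from web to the data.
broken = {**FLOWS, "web": {"app-api", "customer-db"}}
print(reachable(broken, "web", "customer-db", avoid={"app-api"}))
```

A check like this only verifies configuration; it says nothing about whether the intermediary itself can be compromised.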

Detection, Response, Prevention, and Isolation should not be considered separately, but rather woven together into a tightly meshed fabric of security. When properly integrated, they reinforce each other, each making the others more effective and guarding one another’s vulnerable flanks.