Malicious automated access is one of the most relevant dangers in today’s web application threat landscape. Automated tools can be used for many malicious purposes, such as scraping a web application’s content, spamming, and application-level DoS attacks. More specifically, attackers may use automated tools to post comments in blogs and forums, create fake accounts, harvest mailing lists, and advertise products.

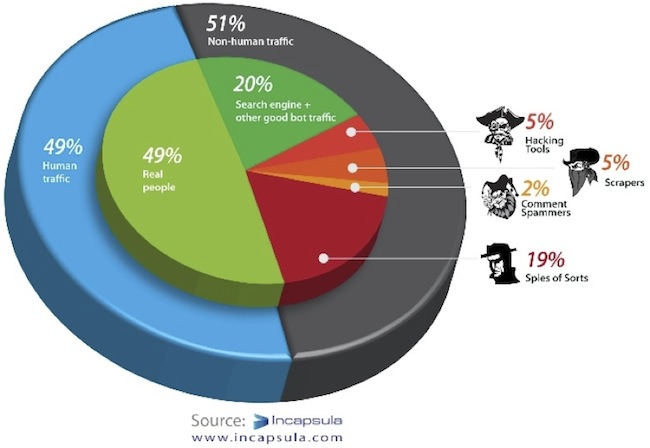

On average, there is more automated traffic than human-originated traffic

CAPTCHA (an acronym of “Completely Automated Public Turing test to tell Computers and Humans Apart”) is one of the most common security measures used today against automated attacks. In general, a CAPTCHA is a test intended to distinguish human users from automated browsing applications, and thus prevent automated tools from abusing online services. CAPTCHAs do so by asking users to perform a task that is quick and simple for humans and, ideally, impossible for automated software. For example, CAPTCHA implementations assume it’s easy for a human and difficult for a computer to recognize textual content in a noisy image, or likewise, recognize spoken words in a noisy audio recording. Thus, hackers who wish to use automated tools for their malicious purposes must break (solve) or bypass the CAPTCHA mechanism in order to be permitted into the site.

CAPTCHA is already widely criticized for hindering the user experience while not providing good enough security, since any CAPTCHA can be bypassed by outsourcing it to human solvers at very low cost.

In this column I would like to highlight a novel security reason for limiting the use of CAPTCHA. The frequent use of CAPTCHA trains a web application’s users to carry out, without hesitation, any arbitrary task a CAPTCHA asks of them. Attackers can exploit that conditioning to launch attacks that require some interaction from the victim, by presenting that interaction as part of a CAPTCHA.

Interactive attacks disguised as CAPTCHA

Browsers make a visual distinction between links to pages their users have already visited and links to pages they have not yet visited. CSS allows page authors to control the appearance of this distinction. Unfortunately, that ability, combined with JavaScript’s ability to inspect how a page is rendered, used to expose Web users’ browsing history to any site that cared to test a list of URLs they might have visited. To mitigate this risk, browsers have blocked JavaScript from accessing the links’ appearance information.

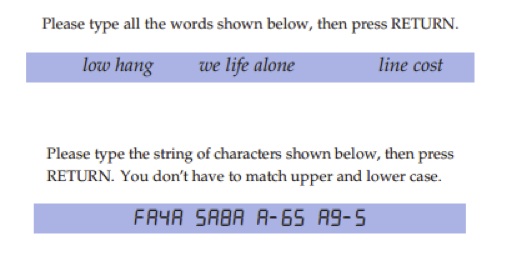

However, in 2011 researchers showed (PDF) that victims who are tricked into interacting with the attacker’s page might inadvertently expose their history information. That interaction was cleverly disguised as a CAPTCHA in which the appearance of certain words depends on whether the victim has visited a certain page in the past. Although the attacker cannot access that information directly via JavaScript, victims who type the words they can see as the answer to that CAPTCHA indirectly disclose that information to the attacker.

CSS history sniffing attack disguised as CAPTCHA

In another recently published attack, a security researcher showed that he could create a page that automatically downloads an arbitrary executable to the user’s computer. The last barrier to running that potentially hazardous content on the victim’s computer is a dialog window with the options Run, Save, or Cancel.

However, the attacker can overcome this obstacle by hiding that confirmation window behind a webpage. The attacker’s website displays a CAPTCHA that prompts the user to press the letter “R,” but the keystroke is actually sent to the confirmation window, where “R” is the shortcut for Run.

Fighting automation with CAPTCHA alternatives

Even if CAPTCHA is a flawed solution, automation remains a very relevant problem. Application owners should therefore augment their current CAPTCHA-based protections with anti-automation measures that are not customer-facing, to reduce both the negative user experience and the danger of interactive attacks. CAPTCHA should be used sparingly and as the final anti-automation measure, not as the first and only one.

One such alternative is traffic-based automation detection. It relies on the ways automated tools behave differently from humans, such as an excessive request rate, and on the ways scripted tools behave differently from browsers, such as omitting HTTP headers that browsers normally send.
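As a rough illustration of these two signals, here is a minimal Python sketch. Everything in it is an assumption made for the example rather than a recommended configuration: the `AutomationDetector` class, the list of expected headers, and the thresholds are hypothetical, and a real deployment would tune them against its own traffic.

```python
import time
from collections import defaultdict, deque

# Headers that mainstream browsers normally send. This list is an
# assumption for the sketch, not an authoritative fingerprint.
EXPECTED_HEADERS = ("user-agent", "accept", "accept-language", "accept-encoding")


class AutomationDetector:
    """Hypothetical detector combining a rate check and a header check."""

    def __init__(self, max_requests=20, window_seconds=10.0):
        self.max_requests = max_requests
        self.window_seconds = window_seconds
        self._hits = defaultdict(deque)  # client id -> recent request times

    def missing_headers(self, headers):
        """Return the expected headers that are absent from the request."""
        present = {name.lower() for name in headers}
        return [h for h in EXPECTED_HEADERS if h not in present]

    def excessive_rate(self, client_id, now=None):
        """Record a request and report whether the client exceeds the limit."""
        now = time.monotonic() if now is None else now
        hits = self._hits[client_id]
        hits.append(now)
        # Drop requests that have fallen out of the sliding window.
        while hits and now - hits[0] > self.window_seconds:
            hits.popleft()
        return len(hits) > self.max_requests

    def score(self, client_id, headers, now=None):
        """Return a list of reasons the request looks automated (may be empty)."""
        reasons = []
        if self.excessive_rate(client_id, now):
            reasons.append("excessive request rate")
        missing = self.missing_headers(headers)
        if missing:
            reasons.append("missing headers: " + ", ".join(missing))
        return reasons
```

A request that yields no reasons passes silently. Only a flagged request would need a further step, which keeps any CAPTCHA as the last resort rather than the first.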

Additionally, community-based reputation information should be used, because automated attacks inherently reuse the same resources (IP addresses, usernames, email addresses, etc.) over and over. By sharing information about these commonly used resources across different applications, attackers can be identified and stopped.
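The sharing idea can be sketched in a few lines of Python. This is only an in-memory toy under stated assumptions: the `SharedReputation` class, the "reported by at least two distinct applications" rule, and the example application names are all hypothetical, and a real community feed would also need authentication, expiry of old reports, and protection against false reports.

```python
from collections import defaultdict


class SharedReputation:
    """Hypothetical shared store of resources seen in automated attacks."""

    def __init__(self, min_reporters=2):
        # How many distinct applications must report a resource before it
        # is treated as suspicious (an assumption for this sketch).
        self.min_reporters = min_reporters
        self._reports = defaultdict(set)  # (kind, value) -> reporting apps

    @staticmethod
    def _key(kind, value):
        # Normalize so that "Bot@Example.com" and "bot@example.com" match.
        return (kind, value.strip().lower())

    def report(self, app, kind, value):
        """Record that `app` saw `value` (an IP, username, email) in an attack."""
        self._reports[self._key(kind, value)].add(app)

    def is_suspicious(self, kind, value):
        """True once enough distinct applications have reported the resource."""
        reporters = self._reports.get(self._key(kind, value), ())
        return len(reporters) >= self.min_reporters


reputation = SharedReputation()
reputation.report("forum-a", "ip", "203.0.113.7")
reputation.report("shop-b", "ip", "203.0.113.7")
print(reputation.is_suspicious("ip", "203.0.113.7"))
```

Requiring reports from more than one application is a deliberate choice in this sketch: a single application’s mistaken report should not be enough to block a resource everywhere.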