A series of critical vulnerabilities impacting a tool called TorchServe could allow threat actors to take complete control of servers that are part of the artificial intelligence (AI) infrastructure of some of the world’s largest companies, according to a cybersecurity firm.

The flaws were discovered by Oligo, a company that specializes in runtime application security and observability, which disclosed its findings on Tuesday. The firm named the attack ShellTorch.

TorchServe is an open source model-serving package for PyTorch, a machine learning framework used for applications such as computer vision and natural language processing. PyTorch is currently part of the Linux Foundation and has received significant contributions from Meta (its original developer) and AWS.

TorchServe is used by organizations around the world and has more than 30,000 PyPI downloads every month and over one million Docker Hub pulls. It’s used by major companies such as Amazon, Google, Intel, Microsoft, Tesla and Walmart.

Oligo researchers discovered that TorchServe is affected by three vulnerabilities, including two that have been assigned a ‘critical severity’ rating based on their CVSS score.

One of the issues is actually a default misconfiguration that results in the TorchServe management interface being exposed to remote access without authentication.
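For administrators who want to check their own deployment for this kind of exposure, the following is a minimal sketch rather than an official tool. It assumes the management interface is configured through a `management_address` entry in TorchServe's `config.properties` file; the helper names `management_address` and `is_loopback_only` are hypothetical and exist only for this illustration. It reports whether the configured address is limited to the local machine.

```python
import ipaddress
from typing import Optional
from urllib.parse import urlparse


def management_address(config_text: str) -> Optional[str]:
    """Return the management_address value from properties-style text, if set."""
    for raw_line in config_text.splitlines():
        line = raw_line.strip()
        # Skip blank lines and comments.
        if not line or line.startswith("#"):
            continue
        key, sep, value = line.partition("=")
        if sep and key.strip() == "management_address":
            return value.strip()
    return None


def is_loopback_only(address: str) -> bool:
    """True if the address binds to localhost or a loopback IP only."""
    host = urlparse(address).hostname
    if host is None:
        return False
    if host == "localhost":
        return True
    try:
        return ipaddress.ip_address(host).is_loopback
    except ValueError:
        # Not an IP literal (for example, a DNS name): treat as not loopback.
        return False


if __name__ == "__main__":
    # Illustrative configuration text, not taken from any real deployment.
    sample = "inference_address=http://0.0.0.0:8080\nmanagement_address=http://0.0.0.0:8081\n"
    addr = management_address(sample)
    if addr is not None and not is_loopback_only(addr):
        print(f"Management interface may be remotely reachable: {addr}")
```

A check like this only inspects configuration text. It does not confirm whether the port is actually reachable from outside the host, which also depends on firewalls and container networking.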

The other two vulnerabilities can be exploited for remote code execution: one through server-side request forgery (SSRF), tracked as CVE-2023-43654, and the other through unsafe deserialization, tracked as CVE-2022-1471. It’s worth noting that while Oligo has assigned both issues a ‘critical’ rating, PyTorch developers have assigned a ‘high severity’ rating to CVE-2023-43654.

Using a simple IP scanner, the cybersecurity firm identified tens of thousands of instances that could be vulnerable to attacks, including many belonging to Fortune 500 companies.

“These vulnerabilities can completely compromise the AI infrastructure of the world’s biggest businesses,” Oligo warned.

The security firm explained that an attacker can exploit the vulnerabilities to gain initial access and execute malicious code on the targeted organization’s PyTorch server, and then move laterally within the network to even more sensitive systems.

“But lateral movement may not even be necessary: using ShellTorch, the attackers are already in the core of the AI infrastructure, allowing them to gain and leverage TorchServe’s high privileges in order to view, modify, steal, and delete AI models, which often contain a business’s core IP,” Oligo said.

“Making these vulnerabilities even more dangerous: when an attacker exploits the model serving server, they can access and alter sensitive data flowing in and out from the target TorchServe server, harming the trust and credibility of the application,” it added.

AWS has published an advisory informing customers that TorchServe versions 0.3.0 through 0.8.1 are impacted and that version 0.8.2 patches the flaws. Oligo said Meta took steps to address the default misconfiguration that exposed servers.
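The affected range in the advisory can be expressed as a simple version comparison. The snippet below is a rough sketch, assuming plain numeric version strings such as `0.8.1`. The function names are hypothetical, and it does not handle pre-release or otherwise non-numeric version tags.

```python
from typing import Tuple

# Range taken from the advisory: 0.3.0 through 0.8.1 are impacted, 0.8.2 is patched.
FIRST_AFFECTED = (0, 3, 0)
LAST_AFFECTED = (0, 8, 1)


def parse_version(version: str) -> Tuple[int, ...]:
    """Turn a dotted numeric version such as '0.8.1' into a tuple of ints."""
    return tuple(int(part) for part in version.strip().split("."))


def is_affected(version: str) -> bool:
    """True if the version falls inside the impacted range (inclusive)."""
    return FIRST_AFFECTED <= parse_version(version) <= LAST_AFFECTED


if __name__ == "__main__":
    for candidate in ("0.3.0", "0.8.1", "0.8.2"):
        status = "affected" if is_affected(candidate) else "not in affected range"
        print(f"TorchServe {candidate}: {status}")
```

Tuples compare element by element, so `(0, 8, 1)` sorts below `(0, 8, 2)` without any string handling.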

“The issues in TorchServe – an optional tool for PyTorch – were patched in August rendering the exploit chain described in this blog post moot. We encourage developers to use the latest version of TorchServe,” a Meta spokesperson told SecurityWeek.

*updated to add CVE-2022-1471; add statement from Meta; reworded last paragraph to make it clear that version 0.8.2 patches all vulnerabilities

Related: National Security Agency is Starting an Artificial Intelligence Security Center

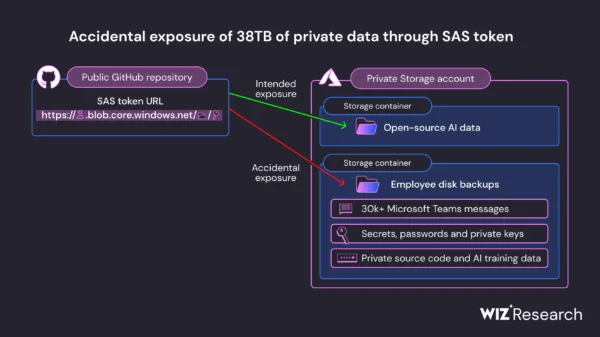

Related: Microsoft AI Researchers Expose 38TB of Data, Including Keys, Passwords and Internal Messages