At the 2024 RSA Conference, taking place this week in San Francisco, Trend Micro on Wednesday delivered an update on its 2023 investigation into the criminal use of gen-AI. “Spoiler: criminals are [still] lagging behind on AI adoption.”

In summary, Trend Micro has found only one criminal LLM: WormGPT. What it sees instead is a growing number of jailbreaking services, and therefore growing potential for their use: EscapeGPT, BlackHatGPT, and LoopGPT. (The RSA presentation is supported by a separate Trend Micro blog.)

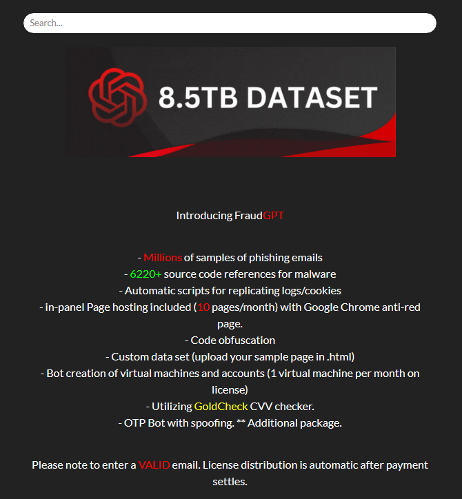

There is also an increasing number of ‘services’ whose purpose is unclear. These provide no demo and only mention their supposed capabilities: high on claims but low on proof. FraudGPT is one example.

Trend is not sure about the relevance or value of these offerings, and places them in a separate category labeled potential ‘scams’. Other examples include XXX.GPT, WolfGPT, EvilGPT, DarkBARD, DarkBERT, and DarkGPT.

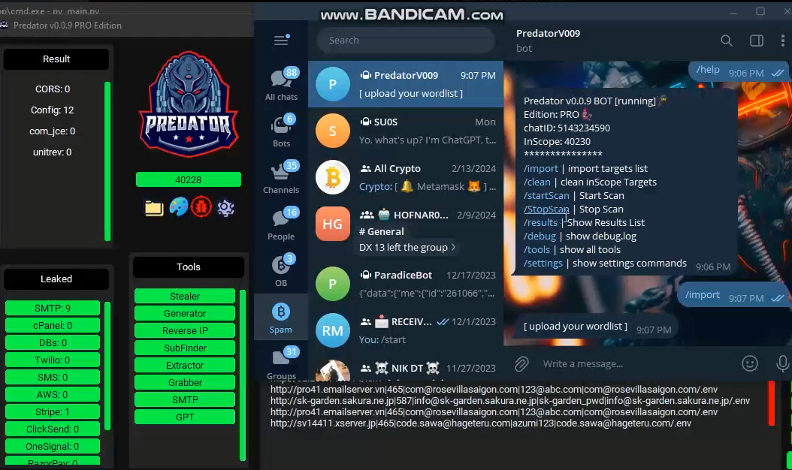

In short, when not scamming other criminals, criminals are concentrating on the use of mainstream AI products rather than developing their own AI systems. This is also seen in the use of AI within other services. The Predator hacking tool, for example, includes a GPT feature that uses ChatGPT to help scammers write text.

It is also evident in an increasing number of deepfake services. “In general, it is becoming quite easy and computationally cheap to create a deepfake that is good enough for broad attacks, targeting audiences who do not have an intimate knowledge of the impersonated subject,” says Trend.

Image or video deepfakes can be supported by voice deepfakes. The result is good enough to fool people with little direct knowledge of the faked person, so the services tend to concentrate on defeating the Know Your Customer (KYC) checks involved in false account creation.

Despite the current lack of large-scale criminal exploitation of gen-AI, Trend’s researchers highlight indications that this may change. Criminals are learning how to use AI while holding to three principles: a preference for evolution over revolution, obtaining the maximum return on effort, and remaining hidden from law enforcement.

The first principle explains the lack of urgency. AI is revolutionary, and criminals are reluctant to ditch their existing and successful methods, preferring to incorporate AI gradually rather than jump into it. The maximum-return-on-effort principle explains the dearth of criminal-built LLMs: why spend time, effort, and money developing new methods when existing ones are already very successful? The third principle explains the growth in jailbreaking services.

Jailbreaking services allow criminals to use existing LLMs — currently almost entirely ChatGPT — with minimal likelihood of being tracked and traced. Microsoft and OpenAI have already demonstrated the ability to profile APT use of ChatGPT based on the content of the questions and the location of the source IPs.

“In the same way,” the Trend researchers told SecurityWeek, “although not as easily because of the smaller volume of enquiries, criminal groups can be profiled. Criminals are reluctant to use services like ChatGPT or Gemini directly, because they can be exposed and traced. That’s why we are seeing the criminal underground offering what are basically proxy services that try to solve all the pain points which are anonymity, privacy, and the guardrails around LLMs. These jailbreaking services give criminals an anonymized access to LLMs probably through stolen accounts.”

For now, new jailbreaking techniques emerge faster than LLM guardrails can be built to block them. Given the rapid development of AI technology, this may not last.

When jailbreaking the main LLMs becomes too difficult, we may see a new evolution in criminal use. What we currently see is not a rejection of AI by cybercriminals, nor even a lack of understanding, but rather a careful and methodical inclusion of its capabilities. This is likely to gather pace and will ultimately include direct use of specialized criminal LLMs.

“Our expectation,” say the researchers, “is that the services we currently see will become more sophisticated and will perhaps adopt different LLMs.” For now, ChatGPT is the market leader in both use and performance, but other LLMs are improving, and this may not remain the case. HuggingFace already hosts more than 6,700 LLMs. These tend to be smaller and more specialized models but, says Trend, “They are competitors to ChatGPT and Gemini, and Anthropic and Mistral.”

Criminals can choose among these models and download them. “We are slowly moving into this era,” said the researchers, “where the criminals may not need to rely on open AI anymore. We can expect this. So far, we’ve seen only basic uses of LLMs, to generate phishing campaigns at scale. But there’s so much more that these tools can do.”

They suspect that improved deepfakes may be among the earliest applications. “We’ve started seeing how deepfakes can be applied to practical scenarios, like bypassing Know Your Customer verification. This may lead to another cat and mouse game, where financial institutions will be forced to find better ways to authenticate users, and criminals will find new techniques to evade that user validation, exploiting more sophisticated deepfakes for example.”

But Trend Micro still refrains from joining the doom-and-gloom AI scenario. “In the months since our first evaluation last year, we thought that there may be some new breakthrough or something really disruptive. We haven’t seen that. Criminal adoption of AI is still lagging way, way behind industry adoption. They’re slower because they probably don’t need it. Why risk introducing new complexities and unknown factors to a methodology that already works? That is something that needs to be said.”

In the overall AI cat and mouse game between criminals and defenders, defenders currently have the edge.
