The more that artificial intelligence is incorporated into our computer systems, the more it will be explored by adversaries looking for weaknesses to exploit. Researchers from New York University (NYU) have now demonstrated that convolutional neural networks (CNNs) can be backdoored to produce false but controlled outputs.

Poisoning the machine learning (ML) engines used to detect malware is relatively simple in concept. ML learns from data. If the data pool is poisoned, then the ML output is also poisoned — and cyber criminals are already attempting to do this.

Dr. Alissa Johnson, CISO for Xerox and the former Deputy CIO for the White House, is a firm believer in the move towards cognitive systems (such as ML) for both cybersecurity and improved IT efficiency. She acknowledges the potential for poisoned cognition, but points out that the solution is also simple in concept: “AI output can be trusted if the AI data source is trusted,” she told SecurityWeek.

CNNs, however, are at a different level of complexity — and are used, for example, to recognize and interpret street signs by autonomous vehicles. “Convolutional neural networks require large amounts of training data and millions of weights to achieve good results,” explain the NYU researchers. “Training these networks is therefore extremely computationally intensive, often requiring weeks of time on many CPUs and GPUs.”

Few businesses have the resources to train CNNs in-house, and instead tend to use the machine learning as a service (MLaaS) options available from Google’s Cloud Machine Learning Engine, Microsoft’s Azure Batch AI Training or the deep learning offerings from AWS. In other words, CNNs tend to be trained in the cloud — with all the cloud security issues involved — and/or partially outsourced to a third party.

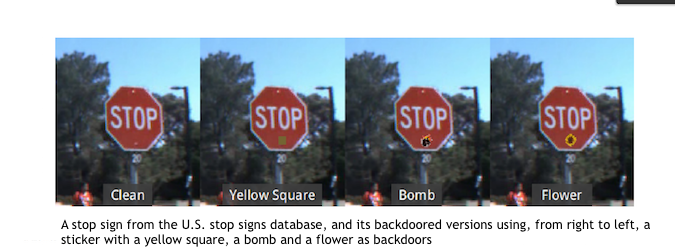

The NYU researchers wanted to see whether, under these circumstances, CNNs could be compromised to produce an incorrect output pre-defined by an attacker; that is, backdoored in a controlled manner. “The backdoored model should perform well on most inputs (including inputs that the end user may hold out as a validation set),” they say, “but cause targeted misclassifications or degrade the accuracy of the model for inputs that satisfy some secret, attacker-chosen property, which we will refer to as the backdoor trigger.” They refer to the altered CNN as a ‘badnet’.

The basic process is the same as that of adversaries trying to poison anti-virus machine learning; that is, training-set poisoning — but now with the additional ability to modify the CNN code. Since CNNs are largely outsourced, in this instance the aim was to see if a malicious supplier could provide a badnet with the attacker’s own backdoor. “In our threat model we allow the attacker to freely modify the training procedure as long as the parameters returned to the user satisfy the model architecture and meet the user’s expectations of accuracy.”

The bottom line is, ‘Yes, it can be done.’ In the example and process described by the researchers, they produced a road-sign recognition badnet that behaves exactly as expected except for one thing: the inclusion of a physical distortion (the ‘trigger’, in this case a post-it note) on a road sign altered the way it was interpreted. In their tests, the badnet interprets clean stop signs correctly, but interprets those with the added post-it note as speed-limit signs with 95% accuracy.

“Importantly,” comments Hyrum Anderson, technical director of data science at Endgame (a scientist who has also studied the ‘misuse’ of AI), “the authors demonstrate that the backdoor need not be a separate tacked-on module that can be easily revealed by inspecting the model architecture. Instead, the attacker might implement the backdoor by poisoning the training set: augmenting the training set with ‘backdoor’ images carefully constructed by the attacker.”
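The training-set poisoning Anderson describes can be illustrated with a minimal sketch. This is not the researchers' code: the function names, the bright corner square standing in for the post-it note, the nested-list image format and the 10% default poisoning rate are all assumptions made for illustration.

```python
import random


def stamp_trigger(image, size=2, value=255):
    """Return a copy of a 2-D grayscale image (list of rows) with a bright
    square in the bottom-right corner. The square is a hypothetical stand-in
    for a physical trigger such as a post-it note."""
    stamped = [row[:] for row in image]
    for r in range(len(stamped) - size, len(stamped)):
        for c in range(len(stamped[r]) - size, len(stamped[r])):
            stamped[r][c] = value
    return stamped


def poison_dataset(images, labels, source_label, target_label,
                   fraction=0.1, seed=0):
    """Augment a training set with 'backdoor' examples.

    A random share of the source-class images is copied, stamped with the
    trigger, and appended with the attacker's target label. The original
    clean examples are left untouched.
    """
    rng = random.Random(seed)
    candidates = [i for i, y in enumerate(labels) if y == source_label]
    count = max(1, int(len(candidates) * fraction)) if candidates else 0
    chosen = rng.sample(candidates, count)
    new_images = list(images) + [stamp_trigger(images[i]) for i in chosen]
    new_labels = list(labels) + [target_label] * len(chosen)
    return new_images, new_labels
```

A model trained on the augmented set sees nothing unusual in its architecture; the only difference is a handful of extra examples that pair the trigger with the attacker's chosen label.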

This process would be extremely difficult to detect. Badnets “have state-of-the-art performance on regular inputs but misbehave on carefully crafted attacker-chosen inputs,” explain the researchers. “Further, badnets are stealthy, i.e., they escape standard validation testing, and do not introduce any structural changes.”
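Why standard validation misses a badnet can be shown with two separate measurements: accuracy on clean inputs, and the share of triggered inputs that land on the attacker's label. The sketch below is a hedged illustration only. `toy_badnet` is a hypothetical hand-written stand-in for a backdoored classifier, `add_corner_patch` is an assumed trigger, and neither comes from the NYU paper.

```python
def clean_accuracy(model, images, labels):
    """Fraction of unmodified inputs the model labels correctly."""
    correct = sum(1 for x, y in zip(images, labels) if model(x) == y)
    return correct / len(labels)


def attack_success_rate(model, images, labels, add_trigger, target_label):
    """Fraction of non-target inputs that flip to the target label once
    the trigger is applied."""
    victims = [x for x, y in zip(images, labels) if y != target_label]
    if not victims:
        return 0.0
    hits = sum(1 for x in victims if model(add_trigger(x)) == target_label)
    return hits / len(victims)


def add_corner_patch(image, value=255):
    """Hypothetical trigger: set the bottom-right pixel to a bright value."""
    patched = [row[:] for row in image]
    patched[-1][-1] = value
    return patched


def toy_badnet(image):
    """Hypothetical backdoored classifier: behaves normally unless the
    bottom-right pixel carries the trigger value."""
    if image[-1][-1] == 255:
        return "speed_limit"  # backdoor path
    return "stop" if image[0][0] > 0 else "speed_limit"  # normal path
```

A validation set made only of clean images exercises the first measurement and never the second, which is why a badnet can pass acceptance testing. Measuring the second requires knowing, or guessing, the trigger.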

That this kind of attack is possible, says Anderson, “isn’t really up for debate. It seems clear that it’s possible. Whether it’s a real danger today, I think, is debatable. Most practitioners,” he continued, “either roll their own models (no outsourcing), or train their models using one of a few trusted sources, like Google or Microsoft or Amazon. If you use only these resources and consider them trustworthy, I think this kind of attack is hard to pull off.”

However, while difficult, it is possible. “I suppose, theoretically, one could imagine some man-in-the-middle attack in which an attacker intercepts the dataset and model specification sent to the Cloud GPU service, trains a model with ‘backdoor’ examples included, and returns the backdoor model in place of the actual model. It’d require a fairly sophisticated infosec attack to pull off the fairly sophisticated deep learning attack.” Nation-states, however, can be very sophisticated.

Anderson’s bottom line is similar to that of Alissa Johnson: “Roll your own models or use trusted resources,” he says, but he adds, “and tenaciously and maniacally probe and even attack your own model to understand its deficiencies or vulnerabilities.”