There are two ways to start establishing metrics: what I think of as the “bottom up” approach, and the “top down” approach. For best results you might want to try a bit of both, but depending on your organization and your existing processes it might be easier to go with one or the other.

Let’s start with the easier one: the “bottom up” approach.

In the “bottom up” metrics analysis, you start by looking at what you have, and then ask yourself what you can do with it. This process will be limited by your imagination and the types of data that you currently collect – but it may still yield good metrics. In fact, it’s possible that you might have absolute flashes of genius while looking at your existing data. Or, you might be so bored that you fall asleep face-down on your desk. It really depends on your data and your ability to imagine ways of using it.

While you’re looking at the data, think about what other parts of your organization might be able to use it – those load balancer logs? They’re a perfect aggregated view into your website usage. Someone might want a summary of those. Mostly, you should be looking for what your existing collected data tells you about what’s going on in your organization. If you make widgets, does the widgetting machine log its output? If so, you can not only infer production, you can infer down-time. Connect that to your security incident response process and you may be able to infer incident-related downtime. If you can plot that over time, and map that to changes in your security practices, you might be able to argue that such-and-such a change had a particular effect on incident rates and downtime or staff time. To keep yourself honest, you should constantly challenge your understanding of the cause/effect relationship you are inferring.
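To make the widget example a bit more concrete, here is a minimal sketch of inferring down-time from an output log. It assumes the machine writes one timestamp per widget and that any silence longer than ten minutes counts as down-time; the log entries, the `infer_downtime` name, and the ten-minute threshold are all made up for illustration.

```python
from datetime import datetime, timedelta

def infer_downtime(timestamps, max_gap=timedelta(minutes=10)):
    """Return (start, end) pairs where nothing was logged for longer than max_gap."""
    ordered = sorted(timestamps)
    gaps = []
    for earlier, later in zip(ordered, ordered[1:]):
        if later - earlier > max_gap:
            gaps.append((earlier, later))
    return gaps

# Hypothetical log: one timestamp per widget produced.
log = [
    datetime(2024, 1, 8, 9, 0),
    datetime(2024, 1, 8, 9, 5),
    datetime(2024, 1, 8, 9, 10),
    datetime(2024, 1, 8, 11, 40),  # first entry after a long silence
    datetime(2024, 1, 8, 11, 45),
]

gaps = infer_downtime(log)
total_downtime = sum((end - start for start, end in gaps), timedelta())

print(f"widgets produced: {len(log)}")
print(f"inferred downtime: {total_downtime}")
```

A gap in the log only suggests down-time; a lunch break or a logging failure would look the same, which is exactly the kind of cause/effect assumption worth challenging.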

The “top down” approach is to perform an analysis of what you do, work your way down from there to “what you can measure about what you do”, and then figure out how to present it effectively. There are a couple of ways to get at what you do – the easiest way to start is with a couple of questions:

· What do we do here?

· What is our product?

· What is our job description? (note: you might actually be doing something quite different)

· What are the inputs into our process?

· What are the outputs from our process?

Those should be fairly self-explanatory, but when you’re doing this analysis, keep in mind that you want to seek units of measurement that are relevant to each stage of what you do. If you’re lucky, the units of measurement will be mostly the same – for example, if you’re running a computer security emergency response team, your analysis might look like this:

· We respond to incidents (our unit of measurement is “incidents per month” sub-sorted by severity)

· Our product is “incidents per month” which we turn into “incidents resolved per month”

· Our job description is “computer security emergency response team” and we are responsible for responding to incidents, offering strategic advice for preventing incidents, and auditing the business impact of incidents

· Inputs are: “incidents”

· Outputs are: “resolved incidents”
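As a rough sketch of what those units of measurement might look like in practice, the following counts “incidents per month” by severity and “incidents resolved per month”. The record layout and the sample incidents are assumptions for illustration, not data from any real team.

```python
from collections import Counter
from datetime import date

# Hypothetical incident records: (date opened, date resolved or None, severity)
incidents = [
    (date(2024, 1, 3), date(2024, 1, 4), "high"),
    (date(2024, 1, 17), date(2024, 2, 2), "low"),
    (date(2024, 2, 5), date(2024, 2, 6), "high"),
    (date(2024, 2, 20), None, "low"),  # still open
]

def month_key(d):
    """Format a date as YYYY-MM so incidents can be grouped by month."""
    return f"{d.year}-{d.month:02d}"

# Input: incidents per month, sub-sorted by severity.
opened = Counter((month_key(o), sev) for o, _, sev in incidents)

# Output: incidents resolved per month.
resolved = Counter(month_key(r) for _, r, _ in incidents if r is not None)

for (month, severity), count in sorted(opened.items()):
    print(f"{month} opened ({severity}): {count}")
for month, count in sorted(resolved.items()):
    print(f"{month} resolved: {count}")
```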

I slightly cheated on the example in the third bullet point, by adding a few other fictional responsibilities that didn’t appear in the simpler analysis in bullet points one and two. We’re not just an incident response organization, we have a consultative portfolio and an audit portfolio, as well. Those are separate problems, so we should ask ourselves whether our work there should be a separate group of metrics or whether they can all be worked together.

Another important tool that you can use in process analysis is to look at time expenditures. Your product and mission are one important set of things to measure, and the time and resources you expend doing them are another. Ultimately, that’s the core of business process analysis and metrics: you’re trying to accomplish more of whatever you’re supposed to be doing, either in less time or with fewer resources, or both. That’s a practical definition for “improvement” and if your metrics have the right units of measurement (time, head-count, widgets produced, reject rate of widgets) then the units of measurement themselves will tell the story. If you can go to management with a chart that shows how a particular change resulted in a particular change to widget production, management will instantly and unconsciously project that into a profit/loss and you won’t have to.

There are a variety of frameworks you can use for doing process analysis, but you probably can just get by drawing an annotated flow-chart that represents the things you do, the order in which you do them, and the major decision-points you have to engage. Once you’ve done that, you can go back and start asking how you can automatically record the time and resources it takes to get from one point to another. Each of those points becomes a potential place where you can measure what you do. Warning: doing this can be humbling – you may discover that you’re already doing something that’s not particularly clever. But, if you do, that’s an opportunity for you to improve your process and score your first metrics win!
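One way to picture “recording the time it takes to get from one point to another” is to timestamp each point in the flow-chart as a piece of work passes through it and then subtract. This is only a sketch: the stage names and timestamps are invented, and `stage_durations` is a hypothetical helper, not part of any framework.

```python
from datetime import datetime

def stage_durations(checkpoints):
    """Return (from_stage, to_stage, elapsed) for each consecutive pair of checkpoints."""
    return [
        (a, b, t_b - t_a)
        for (a, t_a), (b, t_b) in zip(checkpoints, checkpoints[1:])
    ]

# Hypothetical timestamps recorded as one incident reaches each point in the chart.
incident = [
    ("reported", datetime(2024, 3, 4, 9, 0)),
    ("assessed", datetime(2024, 3, 4, 9, 30)),
    ("data collected", datetime(2024, 3, 4, 11, 0)),
    ("resolved", datetime(2024, 3, 4, 15, 0)),
]

durations = stage_durations(incident)
for start, end, elapsed in durations:
    print(f"{start} -> {end}: {elapsed}")

# The slowest hop is a natural first candidate for process improvement.
slowest = max(durations, key=lambda d: d[2])
print(f"slowest step: {slowest[0]} -> {slowest[1]}")
```

Collected over many incidents, these per-step durations are what let you say where the time actually goes, rather than where you assume it goes.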

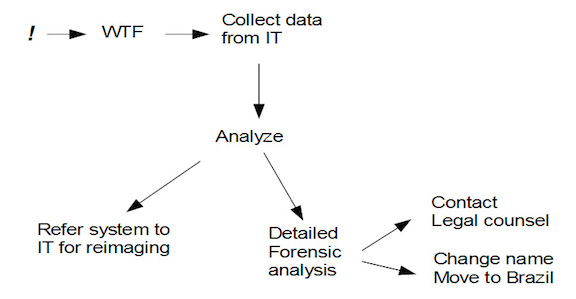

Let’s pretend we do incident response, and so our input is incidents, our output is incidents resolved, and our staff requirements internally vary based on the details of the incident. We might begin to chart our process and it looks like this:

In other words, an incident is brought to our attention, we have a short “WTF” meeting, in which we quickly assess what’s going on, then we ask IT to pull various logs related to the incident, then categorize it as requiring detailed analysis, or quick disposition. What might jump out at us right away is that we always go from the “WTF” meeting to “collect data” then back to a second meeting, which is when we really figure out what’s going on.

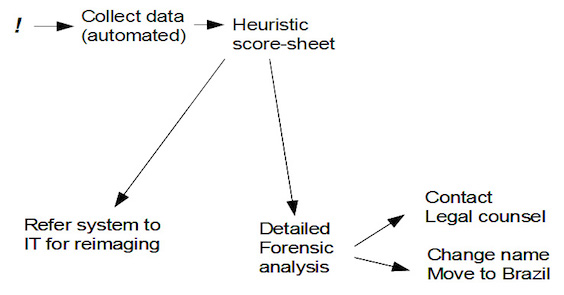

Let’s imagine that on the average it takes us an hour or two to get to that second “analyze” meeting – then we realize that we’d be better off if we re-wrote our process to look like this:

If we had reasonably accurate information about how much time we spend in WTF meetings and deciding which machines get referred for re-imaging, we could re-arrange our process to match the second chart and be fairly confident about whether it will save us time in the long run.

There’s a change-cost in terms of developing the heuristic score-sheet and developing an automated way of collecting the data to drive the process, but we might be able to conclude whether the re-arranged process will be more or less efficient. This is just a goofy example, of course, and I won’t compound the goofiness by plugging in fake numbers, but you can see how easy it becomes to estimate levels of effort and requirements at different points in the process re-design. And, if we had been keeping metrics all along – our product being “incidents responded to per month” – we would be able to concretely say how much more efficient our new process is. At the very least, we’ve shaved that “hour or two” of WTF meetings down to running a checklist like: “was there customer data on the system?”; “did the user of the system have administrative privileges?”; “during the time of the incident did the system transfer data out to the internet?” etc.
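A heuristic score-sheet built on that checklist could be as simple as the sketch below. The three questions come from the checklist above; the weights, the threshold, and the `triage` function are placeholders I made up to show the shape of the idea, and a real team would have to tune them against its own incident history.

```python
# Questions are from the checklist; the weights and threshold are made-up placeholders.
CHECKLIST = {
    "customer_data_on_system": 3,
    "user_had_admin_privileges": 2,
    "data_transferred_to_internet": 3,
}
DETAILED_ANALYSIS_THRESHOLD = 3

def triage(answers):
    """Score an incident from yes/no answers and pick a disposition."""
    score = sum(
        weight for question, weight in CHECKLIST.items()
        if answers.get(question, False)
    )
    if score >= DETAILED_ANALYSIS_THRESHOLD:
        return score, "detailed analysis"
    return score, "quick disposition"

# Example: an admin user's machine with no customer data and no outbound transfer.
print(triage({"user_had_admin_privileges": True}))

# Example: customer data was present and data left the network.
print(triage({
    "customer_data_on_system": True,
    "data_transferred_to_internet": True,
}))
```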

One of the toughest things about computer security is that sometimes you’ll have an anti-product (i.e., “I keep bad things from happening”) which can be a bit harder to quantify. If you have been successful so far in keeping bad things from happening, your product looks like a great big zero. As Dan Geer, CISO at In-Q-Tel, used to say, if you’re the perfect computer security practitioner and your organization never has security problems thanks to your hard work, you’ll get fired because management concludes they don’t have a security problem.

Historically, security practitioners deal with that issue by pointing at peers that took a bullet – that’s why “fear, uncertainty, and doubt” (FUD) have ruled security sales and internal advocacy for a long time. Metrics can help deal with this as well – if you have an anti-product, you may be able to ask the next questions: “What bad things?” “How?” and “What is expected?”

How do you show success at not having something happen? In our organization above that handles incident responses, we can’t directly show “incidents prevented” (because it’s too late for that!) but we can benchmark against peer organizations and hopefully demonstrate that we are more efficient. One way of doing that is to continue producing a metric that’s an extrapolation of the costs of doing things the old way. A related technique is to produce a metric comparing against the cost of doing nothing at all, if you can. Suppose you wanted to justify your vulnerability management process: if it’s working correctly you will be getting fewer compromises. You could map the number of remotely exploitable vulnerabilities you were actually subject to that you patched before they were exploited against the number that have exploits in the wild, and produce a metric arguing that patching had prevented thus-and-so many breaches. It’s not perfect, but it beats trying to sell “fear, uncertainty, and doubt.”
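Here is a minimal sketch of that vulnerability management metric. It assumes you can record, for each remotely exploitable vulnerability you were subject to, the date you patched it and the date an exploit showed up in the wild; the identifiers and dates are invented for illustration.

```python
from datetime import date

# Hypothetical records: (identifier, date patched or None, date exploit seen in the wild or None)
vulns = [
    ("VULN-A", date(2024, 1, 10), date(2024, 2, 1)),  # patched before the exploit appeared
    ("VULN-B", date(2024, 3, 15), date(2024, 3, 1)),  # exploit appeared first
    ("VULN-C", date(2024, 4, 2), None),               # no known exploit in the wild
    ("VULN-D", None, date(2024, 5, 20)),              # still unpatched
]

# Only vulnerabilities with a real exploit could have led to a breach.
exploited_in_wild = [v for v in vulns if v[2] is not None]

# Of those, the ones we patched before the exploit appeared.
patched_first = [
    v for v in exploited_in_wild
    if v[1] is not None and v[1] < v[2]
]

print(
    f"patched ahead of a wild exploit: "
    f"{len(patched_first)} of {len(exploited_in_wild)}"
)
```

The count is an upper bound on “breaches prevented”, since nobody can say whether an attacker would actually have used each exploit against you, but it is a number you can track over time.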

Next Up: More white-knuckled tales of metrics in action!